We are interested in developing principled design methods and algorithms that enable robots and other engineered systems to communicate and collaborate with each other, learn from their experiences, and thereby achieve greater autonomy and performance. Our work combines dynamic systems and control theory with machine learning and network communication, and emphasizes both fundamental theoretical research and experiments on physical hardware platforms. Our research can broadly be classified into the areas of Learning Control and Networked Control.

Some example research projects are described below. Please refer to our Publications for more details and the latest results. Most of the research is carried out in collaboration with colleagues from the Autonomous Motion Department (see here for the department’s research overview).

Popular science descriptions of our research (in German) have appeared in Bild der Wissenschaft ("Wenn es was zu sagen gibt", Nov. 2014, pp. 20-23) and Jahrbuch der Max-Planck-Gesellschaft ("Lernende Roboter", May 2015).

Research keywords: Control, Dynamic Systems, State Estimation, Machine Learning, Networks, Cyber-physical Systems, Robotics

Bayesian Optimization for Feedback Controller Tuning

Main collaborators: Alonso Marco Valle, Philipp Hennig, Jeannette Bohg, Stefan Schaal

Autonomous systems such as humanoid robots are characterized by many feedback control loops operating at different hierarchical levels and time-scales. Designing and tuning these controllers typically requires significant manual modeling and design effort and exhaustive experimental testing. It is thus desirable to transfer some of the control design efforts to automatic routines. In this project, we have developed a framework where an initial multivariable controller is automatically improved based on observed performance from a limited number of experiments. The auto-tuning framework combines optimal control design with Bayesian optimization. In particular, we have used the Entropy Search algorithm, which represents the latent control objective as a Gaussian process and suggests new controllers so as to maximize the information gain from each experiment. The video demonstrates how this framework enables a humanoid robot to learn to balance a stick of unknown length.
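The tuning loop described above can be illustrated with a minimal sketch. It is not the project's implementation: the scalar plant, the cost weights, the gain grid, and all function names are hypothetical, and a simple lower-confidence-bound rule is swapped in for Entropy Search to keep the example short. What it shares with the framework is the structure: a Gaussian process models the unknown cost as a function of the controller gain, and each new experiment is chosen from that model.

```python
import math

def closed_loop_cost(k, a=1.2, b=1.0, r=0.1, x0=1.0, steps=50):
    """Quadratic cost of u = -k*x on the hypothetical plant x+ = a*x + b*u."""
    x, cost = x0, 0.0
    for _ in range(steps):
        u = -k * x
        cost += x * x + r * u * u
        x = a * x + b * u
    return min(cost, 100.0)  # clip so unstable gains do not dominate the fit

def rbf(p, q, length=0.3):
    """Squared-exponential kernel with unit prior variance."""
    return math.exp(-0.5 * ((p - q) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def gp_posterior(X, z, xs, noise=1e-3):
    """Zero-mean GP posterior (mean, std) at each point in xs."""
    K = [[rbf(p, q) + (noise if i == j else 0.0) for j, q in enumerate(X)]
         for i, p in enumerate(X)]
    alpha = solve(K, z)
    out = []
    for s in xs:
        ks = [rbf(s, p) for p in X]
        mu = sum(k * w for k, w in zip(ks, alpha))
        v = solve(K, ks)
        var = max(1.0 - sum(k * w for k, w in zip(ks, v)), 0.0)
        out.append((mu, math.sqrt(var)))
    return out

def tune(n_iter=12, beta=2.0):
    """Run n_iter experiments and return the best (gain, cost) found."""
    grid = [0.1 * i for i in range(26)]  # candidate gains 0.0 .. 2.5
    # Two initial experiments at gains 0.5 and 2.0 (grid indices 5 and 20).
    tried = {5: closed_loop_cost(grid[5]), 20: closed_loop_cost(grid[20])}
    for _ in range(n_iter):
        idx = list(tried)
        X = [grid[i] for i in idx]
        y = [math.log(tried[i]) for i in idx]  # log cost compresses the range
        m = sum(y) / len(y)
        s = math.sqrt(sum((v - m) ** 2 for v in y) / len(y)) or 1.0
        z = [(v - m) / s for v in y]  # standardize before fitting
        cand = [i for i in range(len(grid)) if i not in tried]
        post = gp_posterior(X, z, [grid[i] for i in cand])
        # Lower confidence bound: prefer low predicted cost or high uncertainty.
        nxt = min(zip(cand, post), key=lambda t: t[1][0] - beta * t[1][1])[0]
        tried[nxt] = closed_loop_cost(grid[nxt])
    best = min(tried, key=tried.get)
    return grid[best], tried[best]
```

Calling `tune()` returns the best gain found and its cost. On real hardware, `closed_loop_cost` would be replaced by an experiment on the robot, which is why keeping the number of evaluations small matters.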

Find more information on the project webpage.

Select Publications

Gaussian Filtering as Variational Inference

Main collaborators: Manuel Wüthrich, Cristina Garcia Cifuentes, Jeannette Bohg, Stefan Schaal

Real-time control and decision making typically requires knowledge of some variables of interest (often the system state). In most practical cases, the state is, however, not observed directly and must be inferred from noisy measurements. Probability theory and Bayesian inference provide the theoretical underpinnings for this process, which is called filtering or state estimation. Most commonly used estimation algorithms, such as the Kalman Filter or the Extended Kalman Filter, belong to the family of Gaussian Filters (GFs). Based on a novel interpretation, we have extended the standard GF to allow for more expressive approximations of the state distribution and thus improved filtering performance for many nonlinear problems, while maintaining its favorable computational complexity. This is relevant, for instance, for learning of model parameters along with estimating the system state, which can be seen as a form of a combined learning and control problem.
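As a point of reference for the Gaussian Filter family mentioned above, the following is a minimal sketch of one step of a standard scalar Kalman filter. It shows only the textbook baseline, not the extended filter developed in this project; the function name and the model parameters `a`, `q`, `h`, and `r` are assumptions chosen for illustration.

```python
def kalman_step(mean, var, z, a=1.0, q=0.0, h=1.0, r=1.0):
    """One predict/update cycle for the hypothetical scalar model
    x+ = a*x + w,  z = h*x + v,  with Var(w) = q and Var(v) = r.
    """
    # Predict: propagate the Gaussian belief through the linear dynamics.
    mean_pred = a * mean
    var_pred = a * a * var + q
    # Update: condition the predicted belief on the measurement z.
    gain = var_pred * h / (h * h * var_pred + r)
    mean_new = mean_pred + gain * (z - h * mean_pred)
    var_new = (1.0 - gain * h) * var_pred
    return mean_new, var_new
```

For example, starting from a prior with mean 0 and variance 1 and observing `z = 2.0` with unit measurement noise, `kalman_step(0.0, 1.0, 2.0)` moves the mean halfway toward the measurement and halves the variance. The belief is always a single Gaussian, which is the restriction that more expressive approximations aim to relax.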

Find more information on the project webpage.

Select Publications

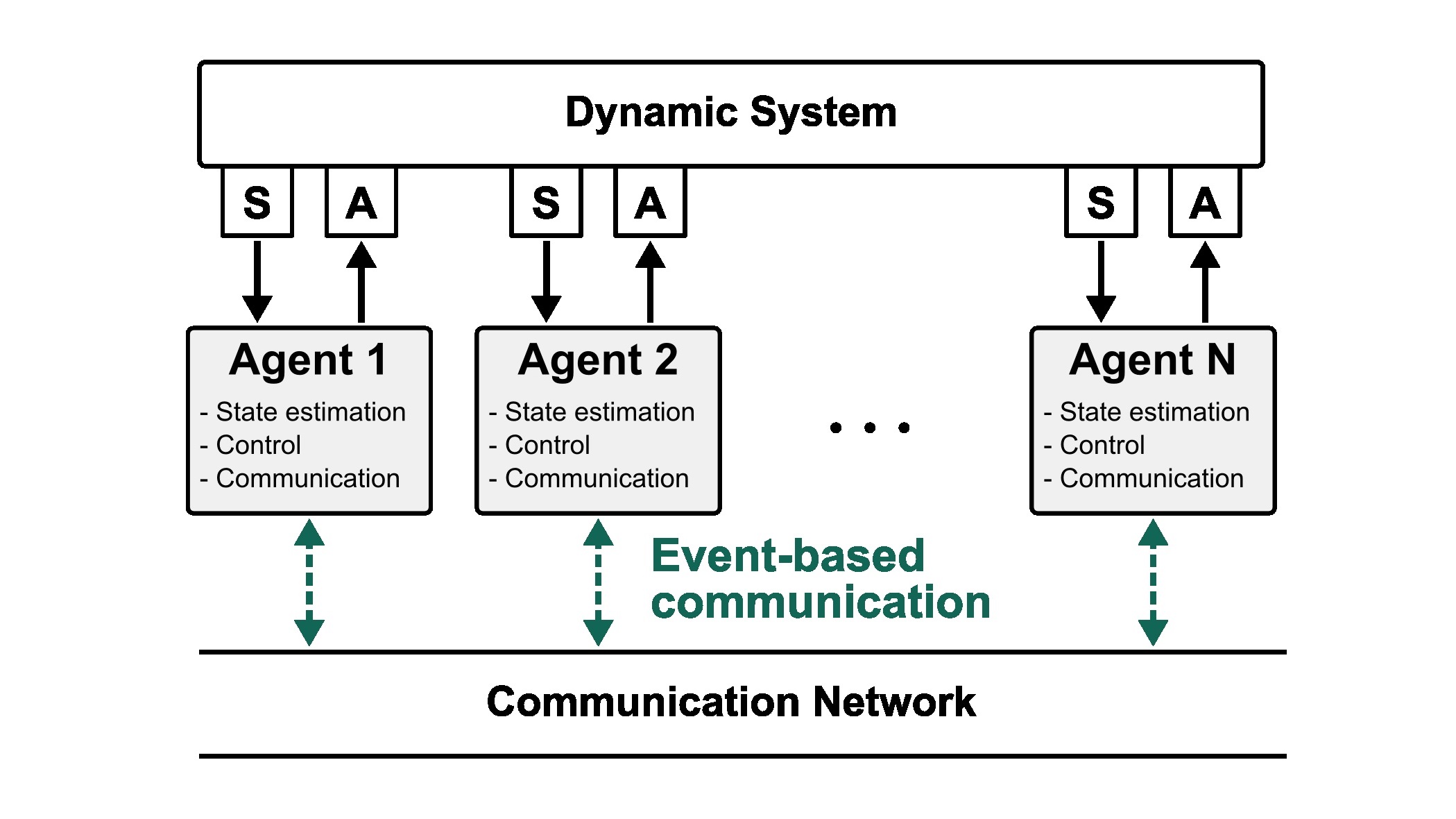

Event-based State Estimation and Control

Main collaborators: Michael Mühlebach, Simon Ebner, Marco C. Campi, Raffaello D’Andrea

In almost all control systems today, data is exchanged between hardware components (sensors, computers, actuators) periodically at fixed update rates. Thus, system resources such as computation and communication are used at predetermined times, irrespective of the state of the system or the information content of the data. This becomes prohibitive when resources are limited, such as in networked control systems, where multiple controller agents share one communication network. Excessive communication can then lead to network congestion, increased delays, data loss, or wasted bandwidth and energy. Event-based methods thus aim to replace periodic communication and processing with more flexible and adaptive schemes where data is transmitted or processed only when necessary (e.g. when an error passes a threshold level). For a networked system with distributed control agents (see figure), we have developed event-based methods, where each agent automatically transmits data only when this is relevant for the other agents. With these algorithms, communication rates adapt to the need for feedback and network resources can thus be saved, which we have demonstrated, for example, on the Balancing Cube (see video below).
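The threshold idea can be sketched with a simple send-on-delta rule: a sensor transmits a sample only when it differs from the last transmitted value by more than a threshold. This is an illustrative simplification, not the triggering rule used in the project; the function name, the threshold value, and the sample data are hypothetical.

```python
def send_on_delta(samples, threshold):
    """Return the indices of the samples that would be transmitted.

    The first sample is always sent. Afterwards, a sample is sent only if
    it deviates from the last transmitted value by more than `threshold`.
    """
    sent = []
    last = None
    for i, value in enumerate(samples):
        if last is None or abs(value - last) > threshold:
            sent.append(i)
            last = value  # the receiver now holds this value
    return sent

# Hypothetical measurement sequence: only three of five samples are sent.
transmitted = send_on_delta([0.0, 0.05, 0.3, 0.32, 0.9], threshold=0.2)
# transmitted == [0, 2, 4]
```

A larger threshold saves more communication at the price of a larger error at the receiver, which is the basic trade-off that event-based designs have to balance.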

Find more information on the project webpage.

Select Publications

Distributed Control / The Balancing Cube

Main collaborator: Raffaello D’Andrea

When multiple controllers make independent decisions, yet are linked through system dynamics, shared resources, or a common objective, one has the problem of distributed control. Control design for distributed problems is known to be more challenging than for a centralized architecture. With the Balancing Cube, we developed and built a unique research test bed, which combines the above challenges.

The Balancing Cube is a two-meter-tall dynamic sculpture that can balance autonomously on any one of its edges or corners (see video). It owes this ability to six rotating arms located on its inner faces. Each of the arms is a self-contained control unit with onboard power, sensors, a computer, and a motor. The controllers communicate with each other over a shared network and coordinate their decisions to keep the cube in balance. In addition to being used as a test bed for research on distributed state estimation and control, we have used the cube to explain our research to the general public during several exhibitions.